Networking#

In-cluster networking#

k0s supports two CNI providers out of the box, plus the ability to bring your own CNI configuration.

k0s uses Kube-router as the default, built-in network provider. It allows setting up CNI networking without any overlays by utilizing BGP as the main mechanism for in-cluster networking:

> A key design tenet of Kube-router is to use standard Linux networking stack and toolset. There is no overlays or SDN pixie dust, but just plain good old networking.

k0s also supports Calico as an alternative, built-in network provider. Calico is a container networking solution that uses layer 3 to route packets to pods. It supports, for example, pod-specific network policies that help secure Kubernetes clusters in demanding use cases. Calico uses a VXLAN overlay network by default. IP-in-IP (ipip) is also supported and can be enabled through configuration.
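As an illustration, selecting Calico and switching it from VXLAN to IP-in-IP might look like the following k0s.yaml fragment. This is a minimal sketch: the `spec.network.calico.mode` field and its `ipip` value are assumptions that should be checked against the configuration reference for the k0s version in use.

```yaml
# Sketch of a k0s.yaml fragment; verify field names against your k0s version.
apiVersion: k0s.k0sproject.io/v1beta1
kind: ClusterConfig
metadata:
  name: k0s
spec:
  network:
    provider: calico   # built-in alternative to the default Kube-router
    calico:
      mode: ipip       # assumed field and value; VXLAN is the default
```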

When deploying k0s with the default settings, all pods on a node can communicate with all pods on all nodes. No configuration changes are needed to get started.

Users can opt out of having k0s manage the network setup and use any network plugin that follows the CNI specification. When custom is configured as the network provider (in k0s.yaml), the user is expected to set up the networking. One way to do this is to place the network provider manifests into /var/lib/k0s/manifests, from where the k0s controllers pick them up and deploy them into the cluster. See the documentation on automatic manifest handling for more details.
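A minimal sketch of opting out in k0s.yaml is shown below. It assumes the provider is set under `spec.network.provider`, so confirm the exact schema for your k0s version.

```yaml
# Sketch of a k0s.yaml fragment; verify field names against your k0s version.
apiVersion: k0s.k0sproject.io/v1beta1
kind: ClusterConfig
metadata:
  name: k0s
spec:
  network:
    provider: custom   # k0s deploys no CNI; the user must supply one
```

With this setting, pods cannot communicate until a CNI plugin has been deployed, for example through manifests placed under /var/lib/k0s/manifests.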

Notes for network provider selection#

The main difference between the Kube-router and Calico network providers is the layer they operate on. In short, Kube-router operates on Layer 2, whereas Calico operates on Layer 3.

A few points to consider for each provider:

Kube-router:

- supports armv7 (among many other architectures)
- uses slightly fewer resources (~15%)
- does NOT support dual-stack (IPv4/IPv6) networking
- does NOT support Windows nodes

Calico:

- does NOT support armv7
- uses slightly more resources
- does support dual-stack (IPv4/IPv6) networking
- does support Windows nodes

Note: Once the cluster has been initialized with one network provider, changing to another provider is not currently supported. Changing the network provider requires a full redeployment of the cluster.

Controller(s) - Worker communication#

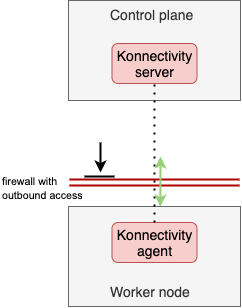

One of the goals of k0s is to allow deployment of a totally isolated control plane, so we cannot rely on there being an IP route between the controller nodes and the pod network. To enable this communication path, which is mandated by conformance tests, we use the Konnectivity service to proxy traffic from the API server (control plane) to the worker nodes. Any firewalls should allow outbound access so that the Konnectivity agents running on the worker nodes can establish the connection. This ensures that all Kubernetes API functionality is available while the control plane still operates in total isolation from the workers.

Needed open ports & protocols#

| Protocol | Port | Service | Direction | Notes |
|---|---|---|---|---|
| TCP | 2380 | etcd peers | controller <-> controller | |
| TCP | 6443 | kube-apiserver | Worker, CLI => controller | authenticated kube API using kube TLS client certs, ServiceAccount tokens with RBAC |
| TCP | 179 | kube-router | worker <-> worker | BGP routing sessions between peers |
| UDP | 4789 | Calico | worker <-> worker | Calico VXLAN overlay |
| TCP | 10250 | kubelet | Master, Worker => Host * | authenticated kubelet API for the master node kube-apiserver (and heapster/metrics-server addons) using TLS client certs |
| TCP | 9443 | k0s-api | controller <-> controller | k0s controller join API, TLS with token auth |
| TCP | 8132,8133 | konnectivity server | worker <-> controller | konnectivity is used as "reverse" tunnel between kube-apiserver and worker kubelets |